How to Validate Mobile App Features Before Building Them

Experienced mobile app builders know that you should run quantitative A/B tests on features after you’ve built them, so that you can optimize their performance accordingly and drive value for your business. But what if there were a way to validate a mobile app feature idea before building it? Then you could confirm that your proposed feature creates value for your users before you invest significant design and engineering effort.

Well, there’s a way to do exactly that! It’s called the “fake door test.”

What is a Fake Door Test?

In a fake door test, you show users the visual entry point into a feature you’re planning to build, even though you haven’t built the rest of the feature yet.

It’s called a fake door test because the entry point (the “door”) is fully functional, but the functionality behind it doesn’t exist yet. It’s a door to “nowhere,” so to speak.

What Do I Need to Execute a Fake Door Test?

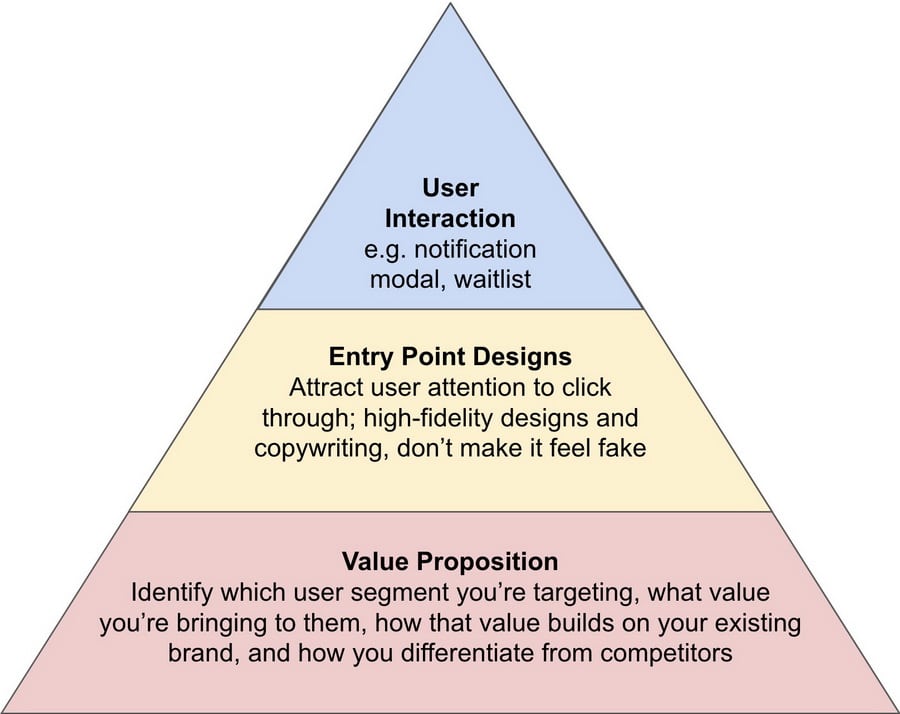

To properly execute a fake door test, you’ll need the following three things:

- A crisp understanding of the feature’s value proposition, so that you can market it appropriately.

- Designs and copywriting for the feature’s entry point that make users genuinely interested in clicking through.

- An interaction that appears after a user clicks through the entry point and lets them know the feature doesn’t exist yet; one popular implementation is a notification modal.
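Here is a minimal Python sketch of the third ingredient. When a user taps the fake door, the app records the tap and then returns the copy for a “coming soon” modal. `AnalyticsLog`, `FakeDoorModal`, `handle_fake_door_tap`, and the event name `fake_door_tapped` are all hypothetical stand-ins. In a real app, the tap would go to your analytics SDK and the modal would be native UI.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class FakeDoorModal:
    """Copy for the modal shown after the fake door is tapped (hypothetical type)."""
    title: str
    body: str
    cta_label: str


@dataclass
class AnalyticsLog:
    """Hypothetical stand-in for an analytics SDK; it keeps events in memory."""
    events: list = field(default_factory=list)

    def track(self, name: str, **props) -> None:
        self.events.append({"name": name, "ts": datetime.now(timezone.utc), **props})


def handle_fake_door_tap(user_id: str, feature: str, log: AnalyticsLog) -> FakeDoorModal:
    # Record the tap first: this click is the signal the test exists to measure.
    log.track("fake_door_tapped", user_id=user_id, feature=feature)
    # Then be honest with the user that the feature isn't available yet.
    return FakeDoorModal(
        title="Coming soon",
        body="We're still researching this feature, and it's not available yet.",
        cta_label="Join the waitlist",
    )


log = AnalyticsLog()
modal = handle_fake_door_tap("user-42", "tile_view", log)
print(modal.title)            # Coming soon
print(log.events[0]["name"])  # fake_door_tapped
```

Logging before showing the modal ensures that no tap is lost, even if the user dismisses the modal immediately.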

Why are Fake Door Tests Valuable?

What’s the value of using fake door tests? They let you quantitatively measure user intent through click-through rates.
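Below is a sketch of that measurement. It assumes you count “exposures” (unique users who saw the entry point) and “clicks” (unique users among them who tapped it). Fake door tests are often shown to a small audience, so the function also returns a Wilson score interval, a standard way to put approximate error bars on a proportion. The function name and the 95% default are illustrative choices, not part of any particular analytics tool.

```python
import math


def click_through_rate(clicks: int, exposures: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (ctr, low, high): the observed click-through rate and a Wilson score
    interval around it (z = 1.96 gives roughly 95% coverage).

    exposures: unique users who saw the fake door's entry point.
    clicks: unique users among them who tapped it.
    """
    if exposures <= 0:
        raise ValueError("exposures must be positive")
    if not 0 <= clicks <= exposures:
        raise ValueError("clicks must be between 0 and exposures")
    p = clicks / exposures
    n = exposures
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return p, center - half_width, center + half_width


ctr, low, high = click_through_rate(clicks=120, exposures=1000)
print(f"CTR {ctr:.1%}, 95% CI {low:.1%} to {high:.1%}")  # CTR 12.0%, 95% CI 10.1% to 14.2%
```

Counting unique users rather than raw taps keeps one enthusiastic user who taps repeatedly from inflating the rate.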

Fake door tests work at a broader scale than 1:1 qualitative interviews, which take significant time to set up and run. You can easily deploy a fake door test across a given percentage of your mobile app user base, and you can scale that percentage up or down to whatever best suits your needs with zero additional development work.
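One common way to get that adjustable percentage is deterministic bucketing. This assumes your app can read a remote configuration value. The idea is to hash each user ID into a stable bucket and show the fake door only to buckets below the rollout threshold. The sketch below is a hypothetical helper, not a specific feature-flag product’s API.

```python
import hashlib


def in_fake_door_cohort(user_id: str, experiment: str, rollout_percent: float) -> bool:
    """Decide, deterministically, whether this user sees the fake door.

    Hashing (experiment, user_id) gives each user a stable bucket in [0, 10000).
    Users whose bucket falls below rollout_percent * 100 are in the test, so the
    same user gets the same answer on every app launch, and raising the
    percentage only adds users rather than reshuffling the cohort.
    """
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest()[:8], 16) % 10_000
    return bucket < rollout_percent * 100


# rollout_percent would normally come from a remote config value, so it can be
# changed without shipping a new app build (an assumption about your setup).
if in_fake_door_cohort("agent-123", "unarchive_lead", rollout_percent=5):
    print("render the fake door entry point")
```

Including the experiment name in the hash means each test gets an independent cohort. Otherwise, the same 5% of users would land in every experiment.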

Fake door tests also work at higher fidelity than qualitative interviews because they track actual user behavior rather than hypothetical user decisions. In qualitative interviews, users aren’t necessarily interacting with the product in its native context; instead, they’re interacting with a moderator who’s guiding their thoughts and actions. With fake door tests, users encounter the feature during their normal day-to-day use of the product, so you get a much stronger signal of how their behavior will likely change once the feature is implemented.

Most interesting of all, with fake door tests, you don’t have to build out the rest of the feature! You only need to build the visual entry point and the interaction that comes right after a user clicks through the entry point.

That means that fake door tests are particularly valuable for high-cost functionality such as:

- Switching from a list view to a tile view for search results (e.g., in shopping, real estate search, and dating apps).

- Localization to support different geographies and languages.

- In-depth integrations with third parties (e.g., CRM integration or partner marketplace integration).

Conversely, don’t use fake door tests if the rest of the feature is low-cost to build.

If you’re just giving people the ability to sign up for a newsletter, there’s no real need to use a fake door test, since newsletter mailing lists are low-cost to implement.

Similarly, if you’re considering having people opt in to a new kind of push notification, there’s no need for a fake door test. Just create the new kind of push notification and measure how users respond in an A/B test.

What are the Downsides of Using a Fake Door Test?

Say that you do decide that it’s worth it to run the fake door test, because the rest of the feature is significant work to build. If that’s the case, you need to be careful with your implementation of a fake door test! You don’t want to anger users when you present them with a non-existent feature.

It’s crucial that you introduce copywriting that gently lets them down after they’ve clicked through the fake door. Be empathetic – people clicked on the feature because they expected it to solve a pain of theirs. Now that they’ve found out the feature doesn’t exist, their pain is likely to be aggravated further.

In other words, the tradeoff of the fake door test is that you’re trading development effort for user trust in your product. The fake door test works best when your audience is mostly early adopters, because early adopters are more forgiving of scrappiness. In fact, some early adopters might be excited that you’re using fake door tests to validate features before implementing them, because it shows that you’re actively learning from their interests.

But, if your audience is mass-market users, a fake door test will likely turn them away from you permanently. Mass-market users have no patience for scrappiness; they expect the user experience to be as flawless and reliable as any other app, and showing them an incomplete feature will cause them to seek out alternative apps rather than continue to use yours.

That’s why fake door tests shouldn’t be rolled out to 100% of your user base. Keep the experiment as limited as possible while still collecting enough signal to make a decision one way or the other, rather than driving all of your traffic to the fake door.
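A rough sample-size estimate can help you judge how limited is “limited enough.” The sketch below uses the standard normal-approximation formula for estimating a single proportion, n = z^2 * p * (1 - p) / E^2, where E is the acceptable margin of error in absolute terms. It assumes users act independently. Comparing the fake door’s click-through rate against a baseline would call for a two-proportion power calculation instead. `eligible_users` is a hypothetical input: the number of users you expect to reach the entry point during the test window.

```python
import math


def exposures_needed(margin: float, expected_ctr: float = 0.5, z: float = 1.96) -> int:
    """Unique users who must see the fake door to estimate its CTR within +/- margin.

    Normal-approximation formula for one proportion: n = z^2 * p * (1 - p) / margin^2.
    expected_ctr = 0.5 is the most conservative guess because it maximizes p * (1 - p).
    """
    if not 0 < margin < 1:
        raise ValueError("margin must be between 0 and 1")
    if not 0 < expected_ctr < 1:
        raise ValueError("expected_ctr must be between 0 and 1")
    return math.ceil(z**2 * expected_ctr * (1 - expected_ctr) / margin**2)


def smallest_rollout_percent(margin: float, eligible_users: int, expected_ctr: float = 0.5) -> float:
    """Smallest percentage of eligible users to expose, capped at 100."""
    if eligible_users <= 0:
        raise ValueError("eligible_users must be positive")
    return min(100.0, 100.0 * exposures_needed(margin, expected_ctr) / eligible_users)


print(exposures_needed(0.05))                                 # 385
print(smallest_rollout_percent(0.05, eligible_users=20_000))  # 1.925
```

For example, estimating the rate within plus or minus 5 percentage points with no prior guess needs 385 exposures. With 20,000 eligible users, a rollout of roughly 2% would be enough, and the other 98% never see the fake door.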

Note that there are times when your organization should never use fake door tests. When your company gets a lot of media coverage (e.g., Facebook, Amazon, Google, Uber), you’ll want to steer clear of them. That’s because media organizations might put a spotlight on your practices and misinterpret your actions, even if you had no ill intent. It’s much better to leverage fake door tests while you’re a small, scrappy startup that’s still looking to achieve product/market fit with early adopters.

Once you’re a large enough organization, the development effort that you’d save through a fake door test isn’t worth the user trust that you might lose. On the flip side, if you’re a small startup, the development effort that you’d save might make or break your company as you continue to drive towards product/market fit.

How Do I Mitigate the Downsides of a Fake Door Test?

Once your user has interacted with the fake door, be honest and transparent.

Let them know that you’re actively assessing user interest in the feature and that you’re considering building it. Here, try to give the user some value as well – provide them a place to put their email so they can sign up on a waitlist, where they’ll be the first to be notified as soon as you release the feature.

Here’s some example language to let people know what’s happening: “Unfortunately, we’re still actively researching this feature, and it’s not yet available for use. If you’d like to stay informed about how this feature is progressing, sign up on our waitlist.”

Of course, be sure to edit the message above to fit your brand and your tone!
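Behind that message, the waitlist itself can be as simple as a de-duplicated list of emails per feature. This is a minimal in-memory sketch. `Waitlist`, `join`, and `notify_on_launch` are hypothetical names. A production version would persist the emails and confirm addresses by sending a message, rather than rely on the loose format check used here.

```python
from dataclasses import dataclass, field


@dataclass
class Waitlist:
    """In-memory waitlist for one feature (hypothetical stand-in for a database table)."""
    feature: str
    emails: set[str] = field(default_factory=set)

    def join(self, email: str) -> bool:
        """Add an email; return False if it looks malformed or is already on the list."""
        # Lowercasing the whole address is a simplification; most providers
        # treat the local part case-insensitively, but the standard doesn't require it.
        normalized = email.strip().lower()
        # Deliberately loose format check: something@domain.tld.
        local, sep, domain = normalized.partition("@")
        if not sep or not local or "." not in domain:
            return False
        if normalized in self.emails:
            return False
        self.emails.add(normalized)
        return True

    def notify_on_launch(self) -> list[str]:
        """Emails to contact first when the feature ships, in a stable order."""
        return sorted(self.emails)


waitlist = Waitlist("unarchive_lead")
waitlist.join("Agent@Example.com")
waitlist.join(" agent@example.com ")  # duplicate after normalization, ignored
print(waitlist.notify_on_launch())    # ['agent@example.com']
```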

Furthermore, depending on the behavior of your user base, you might also give users a small gift for the inconvenience, such as a coupon or a small giveaway. That said, this only works for small user bases.

You don’t want to connect “interacting with the fake door” with “getting a freebie” if you’re a large organization. Users might tip each other off and game the system to get freebies without actually wanting the feature itself, and that will throw off your test results entirely!

A Real-World Example of Using Fake Door Tests

I’ve used this method to validate the value of an “unarchive lead” functionality in a real estate agent CRM mobile app, using less than a week of engineering effort.

First, some context on the problem: for our CRM app, we already had an archive functionality where real estate agents can hide inactive leads from view. Most of the time, once the lead is archived, the agent never needs to interact with it again. However, we had been hearing qualitative feature requests to unarchive leads, because some consumers do reengage with their assigned real estate agent even after months of inactivity.

The challenge for us was that unarchiving a lead is significant work, and it wasn’t something we could do without first making sure it was the right call. Essentially, it would be an irreversible decision, for a couple of reasons.

First, unarchiving a lead requires us to rethink the way that leads are presented in the leads list view for real estate agents. Is it still valid to sort by “lead accepted date” if a lead was accepted in February but unarchived in October? The whole point of “lead accepted date” is to show how fresh a lead is, since stale leads are less likely to transact. But a resurrected, unarchived lead is much more likely to transact than an archived one, even if both have the same lead accepted date.

Second, unarchived leads are problematic for CRMs because it’s not clear how to measure the progression of the lead as it moves through various lifecycle stages. Creating a whole new lead is cleaner from a data perspective, because the original lead really did move into “closed lost”, and the new inquiry from the same customer later on is really a whole new transaction that’s starting from the initial stages of prospecting.

That said, the underlying pain for our agents was that they hated having to create a new lead from a record that they already had created in the past. They wanted to be able to keep all of the notes they had from the lead, they wanted to see all of the past activity that they had, and they didn’t want to duplicate the existing lead into a new record because it would interfere with our search capabilities in the app.

So, to make sure we made the right call, we first ran a fake door test in our CRM mobile app. We surfaced a new “unarchive” button that appeared only in the detail view of an archived lead. This narrowed the audience to the agents who might actually use unarchive functionality, rather than surfacing the feature to the entire population.
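The targeting rule described above can be expressed as a small predicate. This is a hypothetical reconstruction for illustration, not the code from that app. `Lead`, `Screen`, `should_show_unarchive_door`, and `on_lead_detail_opened` are invented names. Exposures are recorded per agent, so the click-through denominator counts only agents who actually saw the button.

```python
from dataclasses import dataclass
from enum import Enum


class Screen(Enum):
    LEAD_LIST = "lead_list"
    LEAD_DETAIL = "lead_detail"


@dataclass
class Lead:
    lead_id: str
    archived: bool


def should_show_unarchive_door(screen: Screen, lead: Lead, agent_in_cohort: bool) -> bool:
    """Show the fake 'unarchive' button only where an agent could plausibly want it:
    on the detail view of an archived lead, and only for agents in the test cohort."""
    return agent_in_cohort and screen is Screen.LEAD_DETAIL and lead.archived


def on_lead_detail_opened(agent_id: str, lead: Lead, agent_in_cohort: bool,
                          exposed_agents: set[str]) -> bool:
    """Return whether to render the button, recording each exposed agent once so the
    click-through denominator is unique agents who actually saw the door."""
    show = should_show_unarchive_door(Screen.LEAD_DETAIL, lead, agent_in_cohort)
    if show:
        exposed_agents.add(agent_id)
    return show
```

Scoping exposure to archived leads keeps agents who never look at archived records out of the denominator, so their absence of clicks doesn’t dilute the signal.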

When agents clicked the button, we showed a pop-up letting them know that the feature didn’t exist yet but that we were gauging interest. Because these agents were fellow employees at our company, we didn’t need a waitlist; after all, we had their user IDs and emails at our fingertips.

A significant portion of agents tried to click through this functionality. In fact, demand was significantly higher than we had expected based on feature request volume, which told us to prioritize the unarchive functionality higher.

We were well-served by our fake door test because it validated the demand and helped us prioritize the work. While my story is a happy one, it helps to think about what the opposite situation might have looked like. What if we had not run the fake door test?

If we had made the irreversible decision to build the unarchive functionality and it had turned out that real estate agents didn’t need it, we would have faced multiple long-term problems. First, our users would have had to deal with the mental overhead of low-value functionality in their CRM, which distracts from the real work of serving their customers. Second, it would have created unnecessary maintenance work for our engineering team; any feature you ship has to be maintained, so it’s crucial to ensure that the feature is actually valuable. Third, we would have squandered valuable screen space, limiting our design team’s future ability to craft excellent user experiences.

Summary

Fake door tests are a valuable method that needs little engineering effort, letting you quickly assess demand for a new feature with high fidelity and at scale. However, the tradeoff is that users might lose trust in you if you don’t implement the fake door in a compassionate and empathetic way.

By taking advantage of this validation method while you’re still scrappy and working with early adopter users, you’ll make the most of limited engineering resources and significantly increase your chances of achieving product/market fit.